Idle hands

For the want of something better to do (okay, because procrastination), a pass at webscraping Wikipedia. For fun. I’m going to use its “Random Page” link to sample pages, and then extract each page’s edit history (looking at how often it is edited, and when). Let’s say we’re interested in getting an idea of the distribution of interest in editing pages.

See update: tidier code.

I’m going to use Emacs lisp for the web scraping.

OK, Wikipedia links to a random page from the Random Page link in the lefthand menu. This is a URL:

https://en.wikipedia.org/wiki/Special:Random

How random is this page? See https://en.wikipedia.org/wiki/Wikipedia:FAQ/Technical#random

Scraping code

The little function below uses url-retrieve-synchronously to fetch the page into an Emacs buffer, and then uses a regular-expression search to extract the title of the page.

    (defun getrandompage ()
      (set-buffer (url-retrieve-synchronously
                   "https://en.wikipedia.org/wiki/Special:Random"))
      (re-search-forward "<title>\\(.+\\) - Wikipedia</title>" nil t)
      (match-string 1))

A page’s edit-history is available at this URL

https://en.wikipedia.org/w/index.php?title=PAGENAME&offset=&limit=5000&action=history

Normally, there are links for varying numbers of edits shown per page, up to a max of 500, but I’ve put 5000 in as the limit, and it seems to work.
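
Titles taken from the `<title>` tag can contain spaces and punctuation (a colon, say), so it is probably safer to percent-encode them before dropping them into the history URL. A minimal sketch, assuming the built-in `url-hexify-string` from `url-util`; the helper name `my/history-url` is made up for illustration and is not part of the code in this post:

```elisp
(require 'url-util)  ; provides `url-hexify-string'

;; Hypothetical helper: build the history URL for TITLE, percent-encoding
;; every character outside the unreserved set (letters, digits, - _ . ~).
(defun my/history-url (title)
  "Return the edit-history URL for the Wikipedia page TITLE."
  (format "https://en.wikipedia.org/w/index.php?title=%s&offset=&limit=5000&action=history"
          (url-hexify-string title)))

(my/history-url "Resident Evil: Afterlife")
;; The title part becomes Resident%20Evil%3A%20Afterlife
```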

The edits are in an HTML list, and can be picked up with a regexp of the following form:

"<li data-mw-revid=.+</li>"

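To see what this regexp picks up without hitting the network, here is a small sketch run against a made-up fragment (the sample markup and the `my/` names are invented for illustration; real history pages carry much more in each entry). Since `.` does not match a newline in Emacs regexps, each match stays within one line of the page.

```elisp
;; Made-up sample standing in for a history page.
(defvar my/sample-history
  (concat "<ul>\n"
          "<li data-mw-revid=\"111\">first entry</li>\n"
          "<li data-mw-revid=\"222\">second entry</li>\n"
          "</ul>\n"))

(defun my/collect-entries (html)
  "Return the <li data-mw-revid=...> entries in the string HTML, in page order."
  (with-temp-buffer
    (insert html)
    (goto-char (point-min))
    (let (entries)
      (while (re-search-forward "<li data-mw-revid=.+</li>" nil t)
        (push (match-string 0) entries))
      ;; `push' builds the list back to front, so flip it at the end.
      (nreverse entries))))

(length (my/collect-entries my/sample-history))  ; => 2
```
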
The following function takes a page name, and returns a list of the edit entries, with the name of the page at the top of the list.

    (defvar history-url
      "https://en.wikipedia.org/w/index.php?title=%s&offset=&limit=5000&action=history")

    (defun gethistory (page)
      (let (results)
        (save-mark-and-excursion
          (set-buffer (url-retrieve-synchronously (format history-url page)))
          (while (re-search-forward "<li data-mw-revid=.+</li>" nil t)
            (push (match-string 0) results))
          (cons page results))))

There is lots of information in the edit entry (date, user, size of resulting file, change in file size, comment, on top of URLs to compare versions). I just want dates, which I capture with this regexp:

"mw-changeslist-date[^>]+?>\\([^<]+\\)"

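A quick way to check the date regexp is to run it over a hand-written entry. The sketch below uses an invented fragment that only imitates the relevant part of the markup, and a hypothetical helper `my/entry-date` that returns nil when there is no match rather than relying on leftover match data.

```elisp
;; Invented entry; only the class name matters to the regexp.
(defvar my/sample-entry
  (concat "<li data-mw-revid=\"111\">"
          "<a class=\"mw-changeslist-date\" title=\"X\">12:34, 5 June 2019</a>"
          " some user (talk)</li>"))

(defun my/entry-date (entry)
  "Return the date text of the history ENTRY, or nil if none is found."
  (when (string-match "mw-changeslist-date[^>]+?>\\([^<]+\\)" entry)
    (match-string 1 entry)))

(my/entry-date my/sample-entry)      ; => "12:34, 5 June 2019"
(my/entry-date "<li>no date</li>")   ; => nil
```
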
The following block puts this all together, writing the results to a tab-delimited file for further processing. Putting everything in a dotimes loop is crude and fragile (any error and the whole batch of data is lost; a more professional approach would catch and deal with errors) but it’s simple. It also ties up the Emacs process, so it is probably best done in a separate process (or --batch style).

    (defun parse-wiki-edit (slug)
      (string-match "mw-changeslist-date[^>]+?>\\([^<]+\\)" slug)
      (match-string 1 slug))

    ;; Do it 1000 times, store in a tab-delimited CSV
    (with-temp-buffer
      (insert "pagetitle\tedittime\n")
      (dotimes (_ 1000)
        ;; Bind locally rather than setq-ing undeclared globals.
        (let* ((page (save-excursion (gethistory (getrandompage))))
               (title (car page)))
          ;; One output row per edit entry.
          (dolist (x (cdr page))
            (insert (format "%s\t%s\n"
                            title
                            (format-time-string
                             "%Y-%m-%d-%T"
                             (encode-time (parse-time-string (parse-wiki-edit x)))))))))
      (write-file "/tmp/wiki.csv"))
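
One way to make the loop less fragile is to wrap each iteration in `condition-case`, so a failed fetch skips one page instead of aborting the whole run. A rough sketch, not the code used for the results here: `my/collect-safely` is a hypothetical helper that takes the fetching function as an argument, which in this post's setting might be something like `(lambda () (gethistory (getrandompage)))`. The demonstration uses a stand-in function so that it runs offline.

```elisp
;; Hypothetical helper: call FETCH-FN N times, keep the results of the
;; calls that succeed, and count the ones that signal an error.
(defun my/collect-safely (n fetch-fn)
  "Call FETCH-FN N times; return (RESULTS . FAILURES).
RESULTS holds the successful return values in call order."
  (let ((results nil)
        (failures 0))
    (dotimes (_ n)
      (condition-case err
          (push (funcall fetch-fn) results)
        (error
         (setq failures (1+ failures))
         (message "Skipping one page: %s" (error-message-string err)))))
    (cons (nreverse results) failures)))

;; Offline demonstration: a stand-in that fails on every third call.
(let ((calls 0))
  (my/collect-safely
   6
   (lambda ()
     (setq calls (1+ calls))
     (if (zerop (% calls 3))
         (error "Simulated network failure")
       calls))))
;; => ((1 2 4 5) . 2)
```

Writing the buffer out every so many pages would be a further safeguard, at the cost of a slightly busier loop.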

Some results

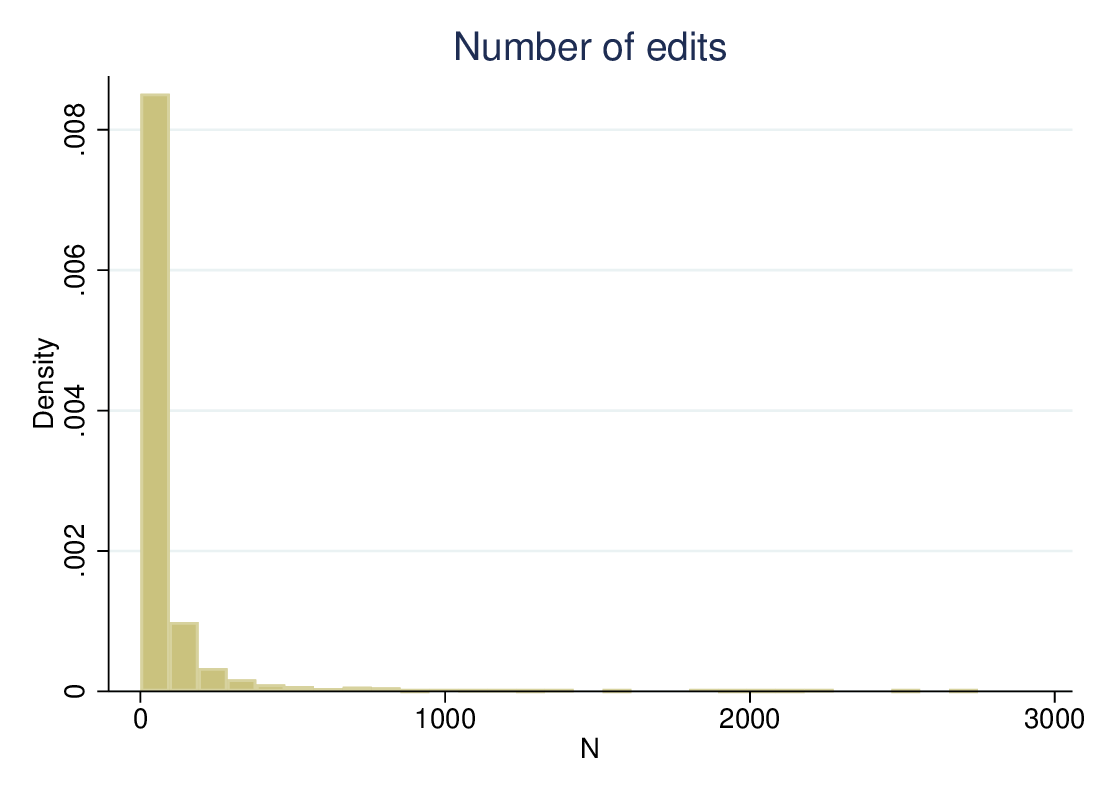

I switch to Stata to analyse the resulting file (you can use anything you like, as long as it’s not Excel). We see an average of about 100 edits per page, but the range is from 1 to almost 2750:

        Variable |        Obs        Mean    Std. Dev.       Min        Max
    -------------+---------------------------------------------------------
               N |        996    103.1697     265.6243         1       2748

An extract from the page frequency table gives a flavour of the eclecticism of Wikipedia:

    . tab pagetitle, sort

                                  pagetitle |      Freq.     Percent        Cum.
    ----------------------------------------+-----------------------------------
     Mitt Romney 2012 presidential campaign |      2,748        2.67        2.67
                   Resident Evil: Afterlife |      2,466        2.40        5.07
              Cartoon Network (Philippines) |      2,255        2.19        7.27
                         Adamson University |      2,226        2.17        9.43
    World War II casualties of the Soviet.. |      2,176        2.12       11.55
                                     Cumans |      2,056        2.00       13.55
                             IndyCar Series |      1,950        1.90       15.45
                   Syrian Democratic Forces |      1,881        1.83       17.28
                            Australian Army |      1,835        1.79       19.07
                     Padmanabhaswamy Temple |      1,829        1.78       20.85
                  List of Cluedo characters |      1,538        1.50       22.34
    [ . . . ]
                 Podosinovets, Kirov Oblast |          1        0.00      100.00
                         Stuart Fitzsimmons |          1        0.00      100.00
                                  Ust-Morzh |          1        0.00      100.00
    ----------------------------------------+-----------------------------------
                                      Total |    102,757      100.00

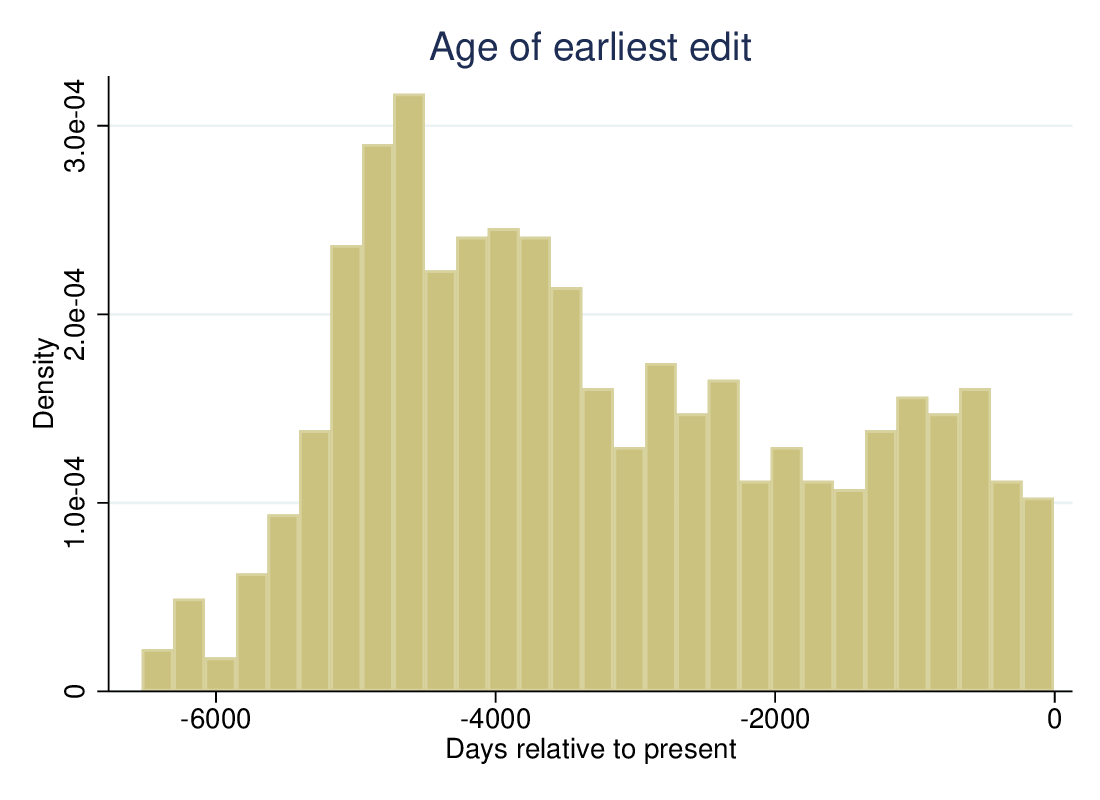

Here is a picture of how long ago the earliest edit was: